Swirling Thoughts on AI, LLMs, Novel Hypothesis Generation and Crypto?

Introduction

If you are anything like me, you might find yourself routinely lost in a never-ending wave of thoughts, like a little Energizer Bunny that refuses to stop its tireless march. Some find this uncomfortable and actively seek ways to quiet or distract their minds with exercise, meditation, or entertainment. Others find it to be their primary source of creativity, or even a form of self-expression. While this little thought engine ought not run 24 hours a day, 7 days a week (sleep being the obvious time to switch it off), I personally feel lost without it, as if the “a-ha” light bulb that illuminates the world around me had been flipped off.

This brings to mind the notion of sampling from LLMs at a specific temperature setting, which can be likened to the temperature of a bubbling cauldron of thoughts.

I must admit there is a spectrum to this cognitive bubbling, with clear boundaries. When the cauldron is too cold, one may present as “dull” or uninspired, with thoughts moving too linearly and too predictably. As you increase the temperature, you pass through the normal range, then through an ADHD-like presentation where thoughts become less constrained by conventional connections. Too hot, and you begin to encounter the disordered thinking seen in conditions such as schizotypal personality disorder, mania, and even schizophrenia, where connections become too tenuous, patterns are perceived where none exist, and reality itself begins to warp. After all, we often hear there is a fine line between madness and genius. The difference might simply be in the quality of the connections made and their ultimate utility.
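To make the LLM side of the analogy concrete: a model divides its logits z_i by a temperature T before the softmax, giving p_i = exp(z_i / T) / Σ_j exp(z_j / T). A low T piles nearly all probability onto the most likely token (the cold cauldron), while a high T flattens the distribution so unlikely tokens are sampled far more often. The minimal sketch below uses made-up logits purely for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after dividing by a temperature T > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical logits for four candidate "next thoughts".
logits = [4.0, 2.0, 1.0, 0.5]
for t in (0.2, 1.0, 5.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Each printed row corresponds to one temperature; the share held by the top candidate shrinks as T rises, which is the “heating up” described above.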

All of this is to say that I spend most of my days seeking out (physically or virtually) new sweet and spicy ingredients to add to my cauldron. And I’ve begun wondering: can our AI systems engage in this same kind of productive “swirling” that leads to truly novel ideas?

Adding structure to the “swirl”

The impetus for this blog post was one of my own recent “swirls,” driven in particular by a question Dwarkesh Patel has posed to many of the world’s leading AI experts:

I still haven't heard a good answer to this question, on or off the podcast. AI researchers often tell me, "Don't worry bout it, scale solves this." But what is the rebuttal to someone who argues that this indicates a fundamental limitation?

This question gets my wheels turning. As someone deeply immersed in AI research, I find myself wondering: why can’t our models, despite consuming the entire corpus of human knowledge, make the novel connections that even moderately intelligent humans make with far less information at their disposal? Is this even true anymore? Perhaps they can, and we just haven’t deployed them with the right incentives, platforms, and affordances to let them “swirl” away productively.

The ingredients simmering in my mental cauldron at the time included:

- A fascinating discussion with a colleague about frontier models generating novel hypotheses that turned out to be true based on wet-lab experiments

- My work implementing GRPO (Group Relative Policy Optimization) from scratch, where I noticed that generating diverse completions with high temperature settings helped explore a wider range of possibilities

- Lila.ai’s renewed interest in applying evolutionary algorithms to AI systems

- The concept of scientific reviewers as generalized verifiers of knowledge

- The future of science, scientific knowledge, knowledge graphs, and AI agents

- PaperBench: Evaluating AI’s Ability to Replicate AI Research.

- My own in-progress benchmark (RISE) for evaluating AI’s ability to evaluate scientific research.

- The provocative notion of “purchasing” scientific innovation, which I was reminded of by a post on X
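Since GRPO appears in that list, a short sketch of its core step helps explain why sampling diversity matters. GRPO samples a group of completions for each prompt and scores each one relative to its own group, A_i = (r_i − mean(r)) / std(r), which removes the need for a separate value (critic) model. If every completion in a group earns the same reward, all advantages are zero and that group contributes no learning signal. This is only the advantage computation, not a training loop; the population standard deviation and the small eps are choices of this sketch, and implementations differ on both.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """GRPO-style advantages: each completion is scored against its own group.

    rewards: verifier rewards for G completions sampled for the *same* prompt.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some code uses sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards for four completions of one prompt (1 = verified correct).
diverse_group = [1.0, 0.0, 0.0, 1.0]
collapsed_group = [1.0, 1.0, 1.0, 1.0]  # e.g. near-identical low-temperature answers

print(group_relative_advantages(diverse_group))    # roughly [1, -1, -1, 1]
print(group_relative_advantages(collapsed_group))  # all zeros: no learning signal
```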

From this swirl emerged a research question that keeps me up at night: Can we develop a training procedure that encourages AI to efficiently explore wildly different, novel ideas that blend concepts from disciplines never before associated with each other? Follow-up questions include:

- How can we leverage the success of emerging reinforcement learning paradigms to do this?

- Are we even asking questions diverse enough to warrant such exploration of ideas? Today’s RL paradigms, for example, are dominated by math and coding questions that can be easily verified.

- Can AI systems trained with this procedure start telling us which questions we should be asking in the first place?

Broadly speaking, the questions above point toward a research area known as “open-endedness.”

The Combinatorial Challenge and Human Intuition

The most glaring challenge facing AI in novel idea generation is that of combinatorial explosion. As the number of concepts and ideas increases, the number of possible combinations grows exponentially, making it impossible for even the most powerful AI to explore all possibilities.
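A quick back-of-the-envelope count shows how fast this blows up. Choosing k concepts out of n gives C(n, k) ≈ n^k / k! groups, which is polynomial in n but climbs steeply with k, while the number of arbitrary subsets of concepts is 2^n, which is genuinely exponential. The concept counts used below are arbitrary examples.

```python
import math


def combination_counts(n_concepts, max_group_size):
    """Number of distinct groups of k concepts, for k = 2 .. max_group_size."""
    return {k: math.comb(n_concepts, k) for k in range(2, max_group_size + 1)}


def total_subsets(n_concepts):
    """Every possible subset of n concepts: 2**n."""
    return 2 ** n_concepts


for n in (10, 1_000, 100_000):
    counts = combination_counts(n, 4)
    print(f"n={n:,}: pairs={counts[2]:,}  triples={counts[3]:,}  quadruples={counts[4]:,}")

print(f"subsets of just 100 concepts: {total_subsets(100):.2e}")
```

At 100,000 concepts the number of four-concept groups is already on the order of 10^18, before even considering how those concepts might be linked.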

This is precisely where human “intuition” gives us an edge. That hard-to-define quality allows humans (particularly those often labeled as “geniuses”) to seemingly pluck the unlocking idea or concept out of thin air, almost as if it were a gift handed down from a higher power.

But what is intuition, really? I sometimes call it “basal ganglia learning”: the habits and tendencies formed subconsciously that drive behaviors and the neural activity giving rise to our conscious thoughts. It is tuned by evolution and, over a lifetime, by our reward systems and pattern recognition. During my undergraduate years studying organic chemistry, I used to joke, “I don’t know orgo, but my basal ganglia does!” After solving countless problems, my intuition knew where to look before my conscious mind did.

This intuition allows humans to more intelligently explore the space of possible connections between concepts. We don’t try all combinations; somehow, we know which ones are worth exploring. Can we build this capability into our AI systems?

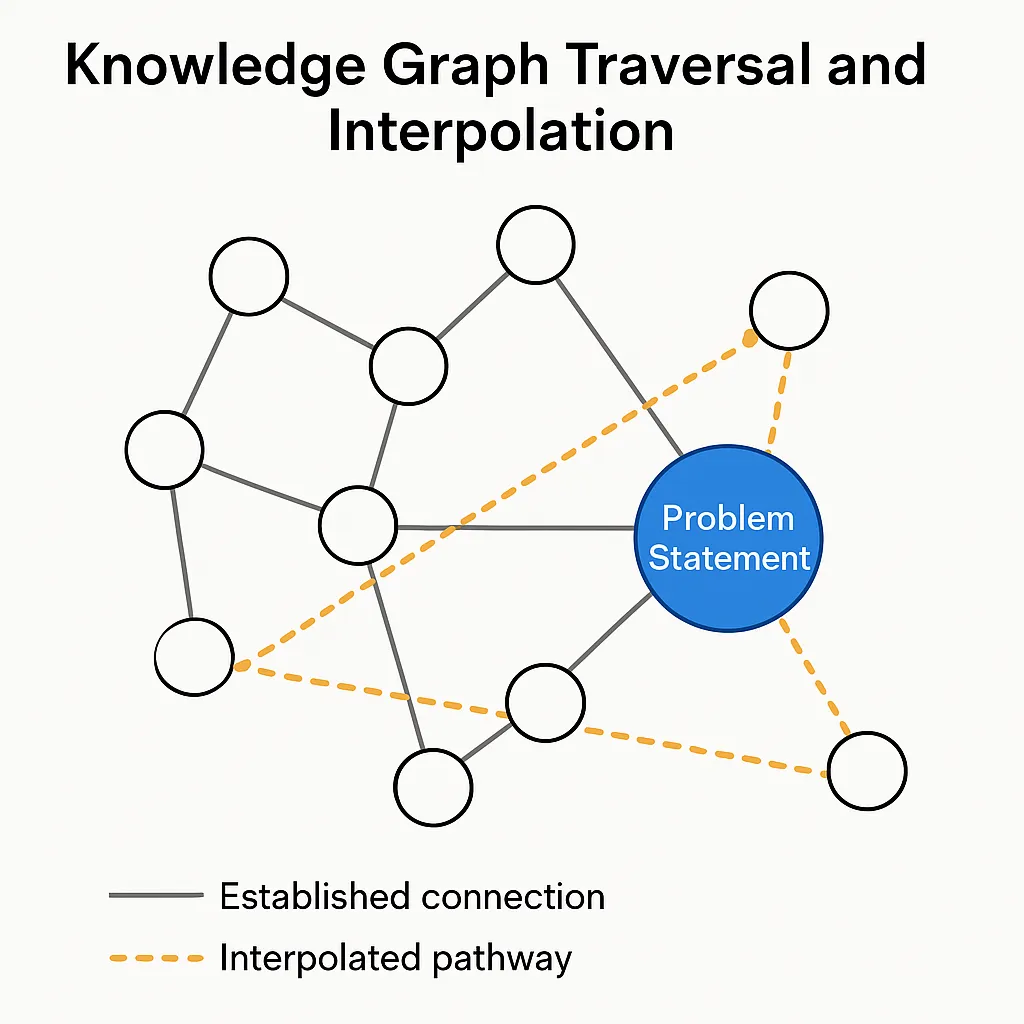

Knowledge Graph Traversal and Interpolation

One approach I’ve been contemplating involves knowledge graph traversal and interpolation. Imagine each node in a vast knowledge graph as a Wikipedia article with its supporting references. The edges represent established connections between concepts.

Novel idea generation might then be formulated as interpolating between intelligently selected nodes in this knowledge graph – finding pathways that have never been explicitly traveled before.

Knowledge Graph Traversal and Interpolation: A sketch of the algorithm for generating novel hypotheses.
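As a minimal illustration of the data structure, assume each node is an article-like record and edges are undirected links between concepts. Interpolating between two concepts that share no direct edge could then start from the shortest chain of existing connections between them, found here with breadth-first search. The class names, toy concepts, and links are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConceptNode:
    """One node: roughly a Wikipedia-style article plus its supporting references."""
    title: str
    references: list = field(default_factory=list)


class KnowledgeGraph:
    def __init__(self):
        self.nodes = {}
        self.edges = {}  # title -> set of neighbouring titles (undirected)

    def add_node(self, node):
        self.nodes[node.title] = node
        self.edges.setdefault(node.title, set())

    def add_edge(self, a, b):
        """Record an established connection between two concepts."""
        self.edges[a].add(b)
        self.edges[b].add(a)

    def has_direct_edge(self, a, b):
        return b in self.edges[a]

    def shortest_bridge(self, start, goal):
        """Breadth-first search for the shortest chain of existing edges from start to goal."""
        parents = {start: None}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            if current == goal:
                path = []
                while current is not None:  # walk back through the parents
                    path.append(current)
                    current = parents[current]
                return path[::-1]
            for nxt in sorted(self.edges[current]):  # sorted for deterministic output
                if nxt not in parents:
                    parents[nxt] = current
                    queue.append(nxt)
        return None  # the two concepts are not connected at all


kg = KnowledgeGraph()
for title in ("Ant foraging", "Swarm intelligence", "Distributed consensus", "Blockchain"):
    kg.add_node(ConceptNode(title))
kg.add_edge("Ant foraging", "Swarm intelligence")
kg.add_edge("Swarm intelligence", "Distributed consensus")
kg.add_edge("Distributed consensus", "Blockchain")

print(kg.has_direct_edge("Ant foraging", "Blockchain"))  # False: never directly linked
print(kg.shortest_bridge("Ant foraging", "Blockchain"))
```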

This approach pairs intuition-like selection of promising concepts with machine-like, high-throughput processing. Exploring all possible combinations of nodes is infeasible even for machines, but human cognitive throughput is incredibly limited compared to what could be achieved by horizontally scaling thousands of AI model replicas.

As Dario Amodei likes to put it, imagine “a country of geniuses in a datacenter.” But what will all these geniuses actually do? How will we put them to work?

The natural answer is to have them pursue science and medicine – fields where novel connections could lead to breakthrough discoveries. Here’s an algorithmic sketch for traversal and interpolation:

Input: Problem statement P, knowledge graph KG, exploration ratio E

Output: Novel hypothesis H

1. Initialize:
   - R = set of nodes in KG directly relevant to P
   - C = confidence threshold for accepting a hypothesis
   - M = maximum path length for exploration

2. Select seed nodes:
   - N_relevant = top-k most relevant nodes from R, based on semantic similarity to P
   - N_random = nodes sampled from KG with probability inversely proportional to their connection to R
   - Re-rank N_random based on the AI’s estimated utility in solving problem P
   - N_seed = N_relevant ∪ N_random, mixed in the ratio determined by E

3. For each pair of nodes (n_i, n_j) in N_seed:
   - Generate all possible paths between n_i and n_j with length ≤ M
   - For each path, calculate a novelty score based on:
     * frequency of co-occurrence of its nodes in existing literature (rarer is more novel)
     * distance between the domains of its nodes (using domain classification)
     * number of interdisciplinary boundaries crossed
   - Rank the paths by novelty score and select the top-N

4. For each selected path:
   - Prompt the AI model to reason through the path:
     "Consider concepts A, B, C... connected through relationships r_AB, r_BC... How might these relate to problem P?"
   - Generate hypothesis H_i
   - Calculate confidence score C_i for H_i

5. Filter hypotheses:
   - Retain H_i where C_i > C
   - Rank the remaining hypotheses by a combination of novelty and confidence

6. Return the highest-ranked hypothesis H

The key insight here is that we’re not just asking the AI to regurgitate known connections; we’re forcing it to consider both related and seemingly unrelated concepts simultaneously, mimicking the way human intuition jumps across domains.
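The six steps of the traversal-and-interpolation outline can be made runnable roughly as follows, as a sketch under heavy assumptions. The graph is a plain adjacency dict, each node carries a single domain label, and `relevance`, `utility`, and `llm` are hypothetical callables standing in for semantic search, the model’s utility estimate, and the model itself. The novelty score is a toy sum of endpoint rarity, endpoint domain distance, and boundaries crossed. Edges are unlabeled, so the prompt lists the path in order rather than naming relationships like r_AB. Weighted sampling without replacement uses Efraimidis-Spirakis keys, and the final ranking multiplies novelty by confidence; both are choices of this sketch, not part of the outline.

```python
import itertools
import random


def all_paths(graph, start, goal, max_len):
    """Depth-first enumeration of simple paths with at most max_len edges."""
    stack = [(start, [start])]
    while stack:
        node, path = stack.pop()
        if node == goal:
            yield path
            continue
        if len(path) - 1 == max_len:
            continue  # already at the maximum path length M
        for nxt in graph[node]:
            if nxt not in path:
                stack.append((nxt, path + [nxt]))


def novelty_score(path, domains, cooccurrence):
    """Toy novelty: endpoint rarity + endpoint domain distance + boundaries crossed."""
    a, b = path[0], path[-1]
    rarity = 1.0 - cooccurrence.get(frozenset((a, b)), 0.0)  # co-occurrence in [0, 1]
    domain_distance = 0.0 if domains[a] == domains[b] else 1.0
    boundaries = sum(domains[x] != domains[y] for x, y in zip(path, path[1:]))
    return rarity + domain_distance + boundaries


def generate_hypothesis(problem, graph, domains, cooccurrence, relevance, utility, llm,
                        exploration=0.5, n_seeds=4, max_len=3, top_n=2,
                        threshold=0.5, rng_seed=0):
    """Toy version of steps 2-6; relevance, utility and llm are hypothetical callables."""
    rng = random.Random(rng_seed)
    # Step 2a: most relevant seeds (relevance() stands in for semantic similarity).
    n_random = round(exploration * n_seeds)
    ranked = sorted(graph, key=lambda n: relevance(problem, n), reverse=True)
    relevant = ranked[: n_seeds - n_random]
    # Step 2b: weighted sampling without replacement (Efraimidis-Spirakis keys),
    # favouring nodes with few links into the relevant set.
    others = [n for n in graph if n not in relevant]
    weight = {n: 1.0 / (1 + len(graph[n] & set(relevant))) for n in others}
    pool = sorted(others, key=lambda n: rng.random() ** (1.0 / weight[n]), reverse=True)
    # Step 2c: re-rank the sampled pool by the model's estimated utility for P.
    explore = sorted(pool[: 2 * n_random], key=lambda n: utility(problem, n),
                     reverse=True)[:n_random]
    seeds = relevant + explore

    # Step 3: score every path of length <= max_len per seed pair; keep the top_n.
    selected = []
    for a, b in itertools.combinations(seeds, 2):
        scored = [(novelty_score(p, domains, cooccurrence), p)
                  for p in all_paths(graph, a, b, max_len)]
        scored.sort(key=lambda item: item[0], reverse=True)
        selected.extend(scored[:top_n])

    # Steps 4-6: reason along each path, keep confident hypotheses, rank, return best.
    candidates = []
    for novelty, path in selected:
        prompt = (f"Consider the concepts {' -> '.join(path)}, connected in that order. "
                  f"How might these relate to the problem: {problem}?")
        hypothesis, confidence = llm(prompt)
        if confidence > threshold:
            candidates.append((novelty * confidence, hypothesis))
    if not candidates:
        return None
    return max(candidates, key=lambda item: item[0])[1]


# Toy inputs: every concept, domain label and callable below is hypothetical.
graph = {
    "ant foraging": {"swarm intelligence"},
    "swarm intelligence": {"ant foraging", "distributed consensus"},
    "distributed consensus": {"swarm intelligence", "blockchain"},
    "blockchain": {"distributed consensus", "peer review"},
    "peer review": {"blockchain"},
}
domains = {"ant foraging": "biology", "swarm intelligence": "computer science",
           "distributed consensus": "computer science", "blockchain": "cryptography",
           "peer review": "sociology of science"}


def keyword_relevance(problem, node):
    return len(set(problem.split()) & set(node.split()))


def stub_llm(prompt):
    return f"Hypothesis derived from: {prompt}", 0.9  # (hypothesis, confidence)


best = generate_hypothesis("speed up peer review consensus", graph, domains, {},
                           keyword_relevance, lambda p, n: 0.0, stub_llm)
print(best)
```

Swapping `stub_llm` for a real model call and `keyword_relevance` for embedding similarity would leave the control flow unchanged; the expensive part in practice is the path enumeration, which is why M and the seed count stay small.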

Verification and Training Dynamics

The model’s training dynamics should follow a cycle inspired by the scientific method:

- Hypothesis generation: Model proposes novel connections between concepts

- Prediction: Model predicts what we should observe if the hypothesis is true

- Verification: External validation (through experiment or expert review)

- Reinforcement: Adjust connection weights in the knowledge graph based on verification outcome

- Propagation: Update related hypotheses based on new information

This creates a dynamic knowledge graph that evolves based on verification outcomes. Connections that lead to verified hypotheses become stronger, while those leading to falsified hypotheses become weaker.
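One way to make the reinforcement and propagation steps concrete, assuming each edge carries a weight in [0, 1] that starts at a neutral 0.5: move the weights along a hypothesis’s path toward 1 when it is verified and toward 0 when it is falsified (an exponential moving average with learning rate lr), then re-score pending hypotheses by their weakest edge. The update rule, the 0.5 prior, and the weakest-link propagation are illustrative choices, not a prescribed method.

```python
def reinforce_path(weights, path, verified, lr=0.2, prior=0.5):
    """Reinforcement step: nudge each edge on the path toward 1 (verified) or 0 (falsified).

    weights maps frozenset({a, b}) -> edge weight in [0, 1]; unseen edges start at prior.
    """
    target = 1.0 if verified else 0.0
    for a, b in zip(path, path[1:]):
        edge = frozenset((a, b))
        current = weights.get(edge, prior)
        weights[edge] = current + lr * (target - current)
    return weights


def path_strength(weights, path, prior=0.5):
    """Propagation step: re-score a pending hypothesis by its weakest edge."""
    return min((weights.get(frozenset(edge), prior) for edge in zip(path, path[1:])),
               default=prior)


# Hypothetical history: a hypothesis along A-B-C is verified, then one along C-B-D is falsified.
weights = {}
reinforce_path(weights, ["A", "B", "C"], verified=True)
reinforce_path(weights, ["C", "B", "D"], verified=False)
print(path_strength(weights, ["A", "B", "C"]))  # lower than before: edge B-C was weakened
```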

The key innovation here is that the model would be explicitly trained to maximize both novelty and correctness, rather than just predictive accuracy on existing data. This addresses a fundamental issue: traditional ML approaches tend to converge toward the status quo rather than exploring novel territory.
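As a hedged illustration of “novelty and correctness” as a single training signal, one simple option is to pay a novelty bonus only when a hypothesis survives verification, so the model is never rewarded for ideas that are merely strange. The gating, the [0, 1] novelty scale, and the 0.5 bonus weight are assumptions of this sketch.

```python
def discovery_reward(verified, novelty, novelty_weight=0.5):
    """Combined reward: zero unless verified, plus a bonus that grows with novelty.

    verified: outcome of external validation; novelty: score in [0, 1].
    A plain accuracy reward would give 1.0 to every verified hypothesis, so it
    cannot tell a rediscovery from a genuinely new connection.
    """
    if not 0.0 <= novelty <= 1.0:
        raise ValueError("novelty must lie in [0, 1]")
    return (1.0 + novelty_weight * novelty) if verified else 0.0


print(discovery_reward(True, 0.0))   # verified rediscovery of a known link
print(discovery_reward(True, 0.9))   # verified, highly novel connection
print(discovery_reward(False, 0.9))  # novel but falsified: no reward
```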

Tracking Progress Through Decentralized Verification

Tracking progress in AI systems’ ability to generate novel hypotheses requires a robust, transparent, and tamper-resistant system. This is where blockchain technologies offer a compelling solution – not just as an afterthought but as a foundational component of the verification framework.

A blockchain-based system for tracking AI-generated hypotheses could:

- Create immutable records of hypotheses generated, including timestamps and the specific version of the AI that generated them

- Track verification attempts through a decentralized network of validators (both human experts and automated systems)

- Establish reputation systems for both hypothesis generators and verifiers

- Tokenize scientific contributions to create economic incentives for verification
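The first bullet rests on tamper evidence. A real system would use an actual blockchain with distributed consensus; the sketch below only demonstrates the underlying hash-chaining idea with Python’s standard library. Each entry stores the hash of the one before it, so editing any earlier record invalidates the chain (provided the latest hash is anchored somewhere others can see). `HypothesisLedger` and its fields are hypothetical names.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record):
    """SHA-256 over a canonical JSON encoding of the record."""
    encoded = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class HypothesisLedger:
    """Append-only, hash-chained log of hypotheses (a toy stand-in for a chain)."""

    def __init__(self):
        self.entries = []

    def append(self, hypothesis, model_version, timestamp=None):
        body = {
            "hypothesis": hypothesis,
            "model_version": model_version,  # which AI version produced it
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": record_hash(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or record_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


ledger = HypothesisLedger()
ledger.append("Hypothesis A", "model-v1", timestamp=1_700_000_000)
ledger.append("Hypothesis B", "model-v1", timestamp=1_700_000_100)
print(ledger.verify())  # True
ledger.entries[0]["hypothesis"] = "Quietly edited after the fact"
print(ledger.verify())  # False
```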

The system would work through several tiers:

Tier 1: Retrospective Discovery (Blockchain as Audit Trail)

For the retrospective discovery track, a blockchain provides an immutable record of what the AI “knew” at the time of hypothesis generation. By restricting the AI’s training data to, for example, pre-2000 medical literature, and recording a commitment to that restriction on-chain (such as a cryptographic hash of the exact training corpus and its cutoff date), we create an auditable claim that any post-2000 discoveries predicted by the AI were genuine predictions rather than regurgitations.

Smart contracts could be designed to automatically reward the system when it successfully “predicts” historical discoveries without having been exposed to them. These rewards could flow back to refine the hypothesis generation algorithm.
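A minimal sketch of the Tier 1 bookkeeping, under two assumptions: the on-chain commitment is a SHA-256 hash over the exact training corpus plus its cutoff date, and a prediction is matched to a later discovery by exact string equality (a placeholder for expert or automated review). A hash only proves which corpus was committed to; on its own it does not prove the model saw nothing else. The function names and example strings are hypothetical.

```python
import hashlib
from datetime import date


def corpus_commitment(documents, cutoff):
    """SHA-256 commitment to a training corpus and its stated cutoff date.

    Each document is hashed separately and the digests are folded in sorted
    order, so the commitment does not depend on collection order.
    """
    digest = hashlib.sha256(cutoff.isoformat().encode("utf-8"))
    for doc in sorted(documents):
        digest.update(hashlib.sha256(doc.encode("utf-8")).digest())
    return digest.hexdigest()


def retrospective_reward(predictions, discoveries, cutoff):
    """Count predictions that match discoveries published *after* the cutoff.

    discoveries maps a discovery (same string form as a prediction) to its date.
    Matches to pre-cutoff discoveries earn nothing: the model may have seen them.
    """
    return sum(1 for p in set(predictions)
               if p in discoveries and discoveries[p] > cutoff)


cutoff = date(2000, 1, 1)
commitment = corpus_commitment(["paper 1 text", "paper 2 text"], cutoff)  # recorded on-chain
discoveries = {"hypothesis X": date(2005, 6, 1), "hypothesis Y": date(1998, 3, 1)}
print(retrospective_reward({"hypothesis X", "hypothesis Y", "hypothesis Z"}, discoveries, cutoff))  # 1
```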

Tier 2: Immediate Validation (Decentralized Verification)

For hypotheses that can be immediately validated through existing data analysis, a decentralized network of validators could be established. Each validator (human or AI) stakes tokens on their verification of the hypothesis, creating a weighted consensus.

The blockchain records both the hypothesis and all verification attempts, creating a transparent record of the scientific process. This approach has several advantages:

- It prevents cherry-picking of results

- It discourages publication bias (where only positive results are reported)

- It creates economic incentives for rapid verification

- It establishes a reputation system for both hypothesis generators and verifiers
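A minimal sketch of the stake-weighted consensus described for Tier 2, plus one possible settlement rule to show where the economic incentive comes from. The strict-majority-of-stake verdict, the 10% slashing rate, and the pro-rata payouts are illustrative assumptions, not the specification of any existing protocol.

```python
def stake_weighted_consensus(votes):
    """votes: list of (validator, stake, says_valid).

    Returns (verdict, share of total stake agreeing with the verdict).
    The verdict is "valid" only with a strict majority of stake.
    """
    total = sum(stake for _, stake, _ in votes)
    if total <= 0:
        raise ValueError("need positive total stake")
    valid_stake = sum(stake for _, stake, says_valid in votes if says_valid)
    verdict = 2 * valid_stake > total
    agreeing = valid_stake if verdict else total - valid_stake
    return verdict, agreeing / total


def settle(votes, verdict, reward_pool, slash_rate=0.1):
    """Slash validators who voted against the verdict; split the slashed stake
    plus reward_pool among those who agreed, in proportion to their stake."""
    winners = [(v, s) for v, s, says_valid in votes if says_valid == verdict]
    losers = [(v, s) for v, s, says_valid in votes if says_valid != verdict]
    slashed = sum(s * slash_rate for _, s in losers)
    winning_stake = sum(s for _, s in winners)
    payouts = {v: -s * slash_rate for v, s in losers}
    for v, s in winners:
        payouts[v] = (reward_pool + slashed) * s / winning_stake
    return payouts


# Hypothetical validators: two human experts and one automated checker.
votes = [("alice", 60, True), ("bob", 30, True), ("auto-checker", 10, False)]
verdict, agreement = stake_weighted_consensus(votes)
print(verdict, agreement)  # True 0.9
print(settle(votes, verdict, reward_pool=10.0))
```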

Tier 3: Long-term Breakthroughs (Tokenized Scientific Futures)

For long-term, paradigm-shifting discoveries, we could implement a scientific futures market on the blockchain. When an AI generates a hypothesis that could lead to a major breakthrough, the system would:

- Mint a unique token representing the intellectual property rights to that hypothesis

- Allow market participants to buy and sell shares in that token based on their assessment of its validity and importance

- Distribute rewards to token holders if and when the hypothesis is ultimately verified

This creates a prediction market for scientific breakthroughs and allows the community to “vote with their wallet” on which AI-generated hypotheses are most promising. It also creates a direct economic mechanism for funding the pursuit of these hypotheses.
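The tier description leaves open how such a market would price hypothesis tokens. One well-studied option is Hanson’s logarithmic market scoring rule (LMSR), sketched here for a binary market in which each YES share pays 1 token if the hypothesis is verified. The YES price can be read as the market’s probability of verification, and the automated market maker’s worst-case loss is bounded by b·ln 2. The liquidity parameter b and the class name are assumptions of this sketch.

```python
import math


class BinaryLMSR:
    """LMSR market maker for a YES/NO market on one hypothesis.

    Cost function: C(q) = b * ln(exp(q_yes / b) + exp(q_no / b)).
    YES price:     exp(q_yes / b) / (exp(q_yes / b) + exp(q_no / b)).
    """

    def __init__(self, liquidity=100.0):
        self.b = liquidity
        self.q = {"yes": 0.0, "no": 0.0}  # outstanding shares of each outcome

    def _cost(self, q_yes, q_no):
        m = max(q_yes, q_no) / self.b  # log-sum-exp trick for numerical stability
        return self.b * (m + math.log(math.exp(q_yes / self.b - m) + math.exp(q_no / self.b - m)))

    def price(self, outcome="yes"):
        other = "no" if outcome == "yes" else "yes"
        return 1.0 / (1.0 + math.exp((self.q[other] - self.q[outcome]) / self.b))

    def buy(self, outcome, shares):
        """Buy shares of an outcome; returns what the trader pays the market maker."""
        before = self._cost(self.q["yes"], self.q["no"])
        self.q[outcome] += shares
        return self._cost(self.q["yes"], self.q["no"]) - before


market = BinaryLMSR(liquidity=100.0)
print(market.price("yes"))    # 0.5 before any trades
cost = market.buy("yes", 50)  # a believer buys 50 YES shares
print(round(cost, 2), round(market.price("yes"), 3))  # price rises above 0.5
```

A larger b makes prices move less per trade (deeper liquidity) at the cost of a larger worst-case subsidy from whoever funds the market maker.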

Measuring Progress Through Token Economics

The aggregate market capitalization of hypothesis tokens becomes a measure of the perceived value of AI-generated science. As AI systems improve in their ability to generate valuable hypotheses, the total value of the token ecosystem should increase, creating a direct economic feedback loop.

This approach also addresses a critical challenge in scientific funding: the allocation of resources to high-risk, high-reward research. By tokenizing hypotheses, we create a mechanism for spreading risk across many stakeholders, potentially unlocking funding for explorations that would be too speculative for traditional grant mechanisms.

Example of Swirling Disparate Ideas: AI + Science + “Crypto” = DeSci

This brings us directly to the emerging DeSci (Decentralized Science) movement, a fascinating real-world example of the cross-domain fertilization I’ve been discussing. By combining concepts from artificial intelligence, scientific methodology, and blockchain economics, DeSci aims to transform how scientific research is funded, conducted, and verified.

Over the last year or so, I’ve been independently exploring the intersection of AI for Science and cryptocurrency technologies, envisioning what novel ideas could emerge from combining these seemingly unrelated fields. To my surprise, I discovered a growing community already working in this space under the DeSci banner.

“Hardcore” AI researchers at frontier labs tend not to interact much with the crypto community; these spaces traditionally don’t attract the same types of people. Discussions of crypto and decentralization are rare within AI circles, largely because the most obvious application, decentralized training, is widely considered computationally impractical due to network latency and bandwidth constraints, at least for pre-training. How this plays out in the post-training reinforcement learning era remains an open and fascinating question.

My impression is that the intersection between self-proclaimed hardcore scientists and fervent crypto enthusiasts is nearly an empty set.

Yet, there’s something compelling about the vision of a decentralized, blockchain-based network whose purpose is to shepherd the human scientific enterprise into the age of artificial intelligence, with token value derived directly from the technological innovation surfaced from the network itself.

By leveraging digital incentives and rewarding peers – both humans and AI agents – directly for their contributions of computational resources, expertise, and innovation, such a network could establish a verified and transparent ecosystem for scientific exchange. Collaboration between humans and AI could be facilitated on top of a decentralized knowledge graph (dKG) grounded in existing scientific literature.

Mining-derived compute could drive multimodal AI inference for agents governed by open-source scientific-agent protocols, allowing those agents to engage in the scientific method. This decentralized fleet of agents would serve as stewards of scientific knowledge, with incentives for maintaining information consistency and complete on-chain transparency. Verification attempts, whether by humans or other AI systems, would also be recorded, creating a comprehensive audit trail.

In the long term, such a marketplace would be well-positioned to serve as a universal information trading post between humans, virtual AI scientists, and perhaps even embodied AI robots, where observations, research questions, testable hypotheses, and experimental validations are exchanged.

Conclusion: The Future of Thought

As our AI systems become more sophisticated, the question of whether they can engage in the kind of “swirling thoughts” that lead to human creativity becomes increasingly relevant. I believe the answer is yes – but it will require intentional design that mimics the non-linear, associative nature of human creativity while leveraging the unique strengths of machine computation.

The combination of knowledge graph traversal, forced interpolation between disparate concepts, verification mechanisms, and reward systems modeled on scientific discovery could lead to AI systems capable of generating truly novel hypotheses.

And perhaps, in the process of designing these systems, we’ll gain deeper insights into our own thought processes – how that mysterious bubbling cauldron of human creativity actually works.

The greatest irony would be if our attempt to create machines that think like humans ends up teaching us the most profound lessons about what it means to think at all.